Creating Redundancy with ZFS Migration

Use Case

As part of my move to California, I wanted to back up my data from one TrueNAS server running on Proxmox to another TrueNAS instance on a different Proxmox server. That gives me at least two copies of the same data on two physically separate servers. This was not just a one-time copy. It is also my first step toward a scheduled backup plan across separate servers, which will give my home lab some data redundancy. More importantly, the TrueNAS and Proxmox versions on the source server are out of date and need to be upgraded. To keep the data available during the upgrade, I wanted to copy it off that server so that other hosts could still access it while the server was down.

What Am I Doing?

ZFS is the foundational filesystem of TrueNAS, so I used its built-in tools. I created a snapshot on the source server and replicated it to the other server over SSH with zfs send and zfs receive. A snapshot is a read-only, point-in-time copy of a dataset, so the source stays online and writable while the snapshot is being sent. The send stream carries checksums that zfs receive verifies. A replication stream (zfs send -R) also brings the dataset properties and child datasets along with the data. Because the transfer is a single stream piped over SSH, it requires minimal downtime.

Steps

First, I set up the underlying plumbing: network connectivity between the two servers. This will vary from network to network. In my case, a VyOS router sits between the two networks, so I had to open a hole in its firewall to allow SSH (TCP port 22) between the servers.
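Before involving SSH at all, it can help to confirm that the firewall rule actually lets TCP traffic through. Below is a minimal sketch of a hypothetical helper (`port_open` is my own name, not a standard tool). It uses bash's built-in /dev/tcp redirection and coreutils `timeout`, and it assumes SSH listens on the default port 22.

```shell
#!/usr/bin/env bash
# port_open HOST [PORT] [SECONDS]
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens
# within SECONDS. Relies on bash's /dev/tcp pseudo-path, so it needs bash.
port_open() {
  local host=$1 port=${2:-22} secs=${3:-5}
  # The child bash opens fd 3 to host:port; timeout stops it if the
  # firewall silently drops packets instead of rejecting them.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

From the source server, run `port_open 10.12.21.3 && echo reachable || echo blocked`. A fast "blocked" usually means a reject rule or nothing listening yet, for example if SSH is not enabled. A "blocked" that only appears after the timeout usually means packets are being dropped.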

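I created the same user and group accounts on the receiving TrueNAS server, so that file ownership and permissions in the replicated data would map to the same users there. ZFS records ownership as numeric user and group IDs. The accounts therefore need matching UIDs and GIDs on both servers, not just matching names.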

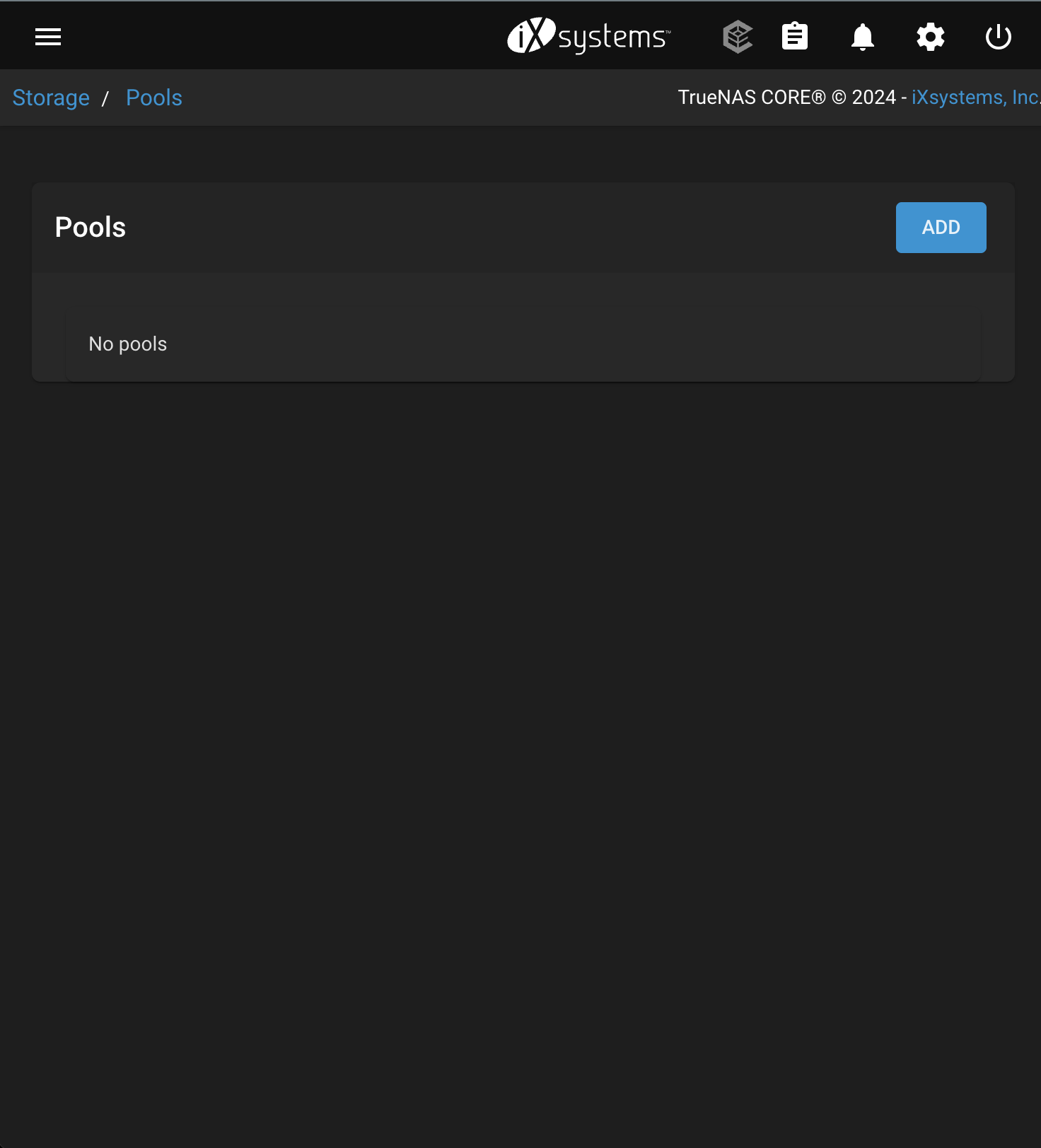
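A quick way to catch a UID or GID mismatch is to print the numbers for each account on both servers and compare them. This is a sketch: `show_ids` is a hypothetical helper, and the account name `andrew` in the usage line is only an example.

```shell
#!/usr/bin/env bash
# show_ids NAME...
# Hypothetical helper: prints "NAME uid=N gid=N" for each account,
# or "NAME missing" if the account does not exist on this server.
show_ids() {
  local u
  for u in "$@"; do
    if id "$u" >/dev/null 2>&1; then
      printf '%s uid=%s gid=%s\n' "$u" "$(id -u "$u")" "$(id -g "$u")"
    else
      printf '%s missing\n' "$u"
    fi
  done
}
```

Run `show_ids andrew` on each server. If the UIDs differ, files on the destination will appear to belong to whichever account holds that UID there.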

Create the data pool on the receiving server. Mine is also named tank, which is the pool the zfs receive command targets.

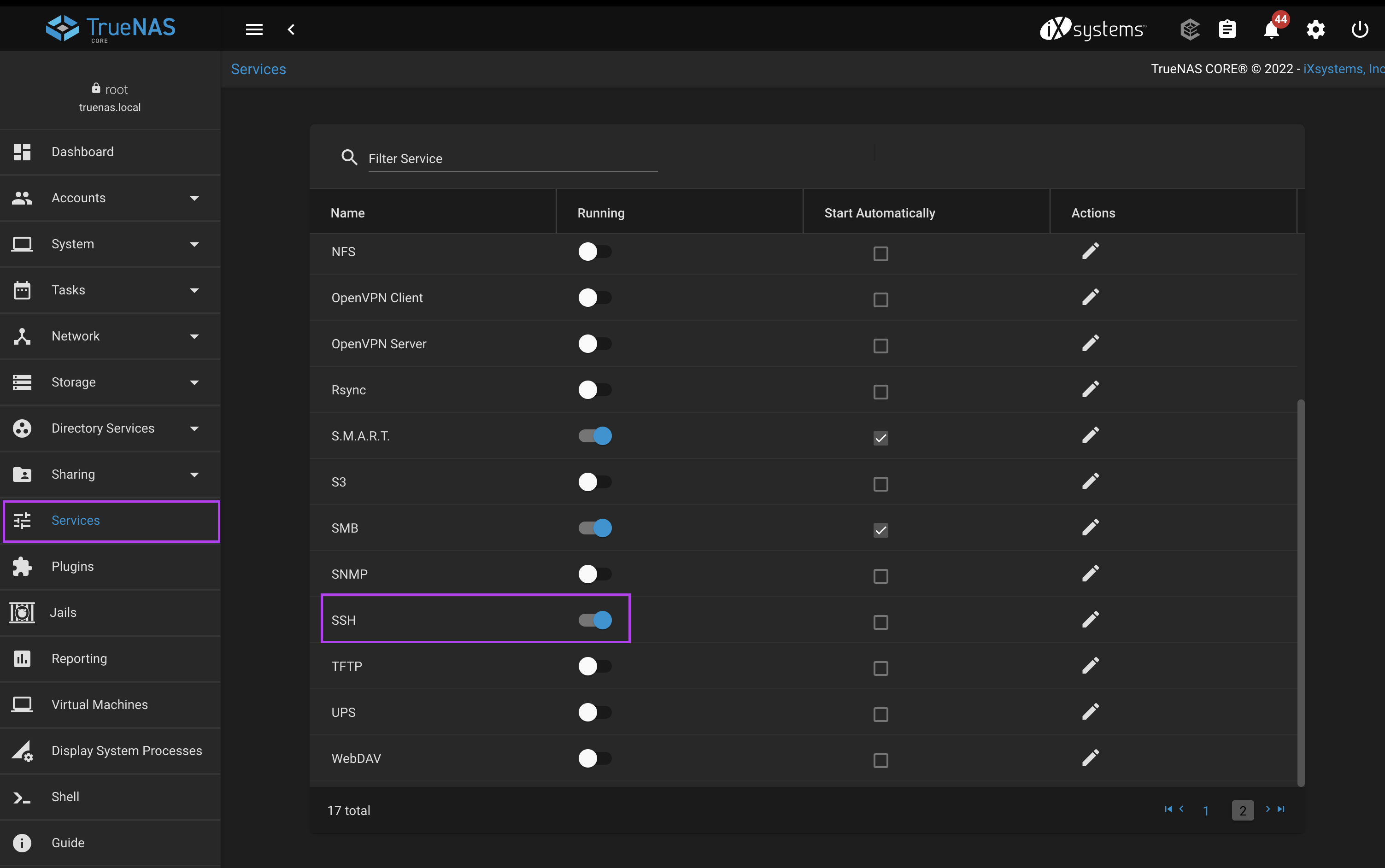

Enable SSH on both servers.

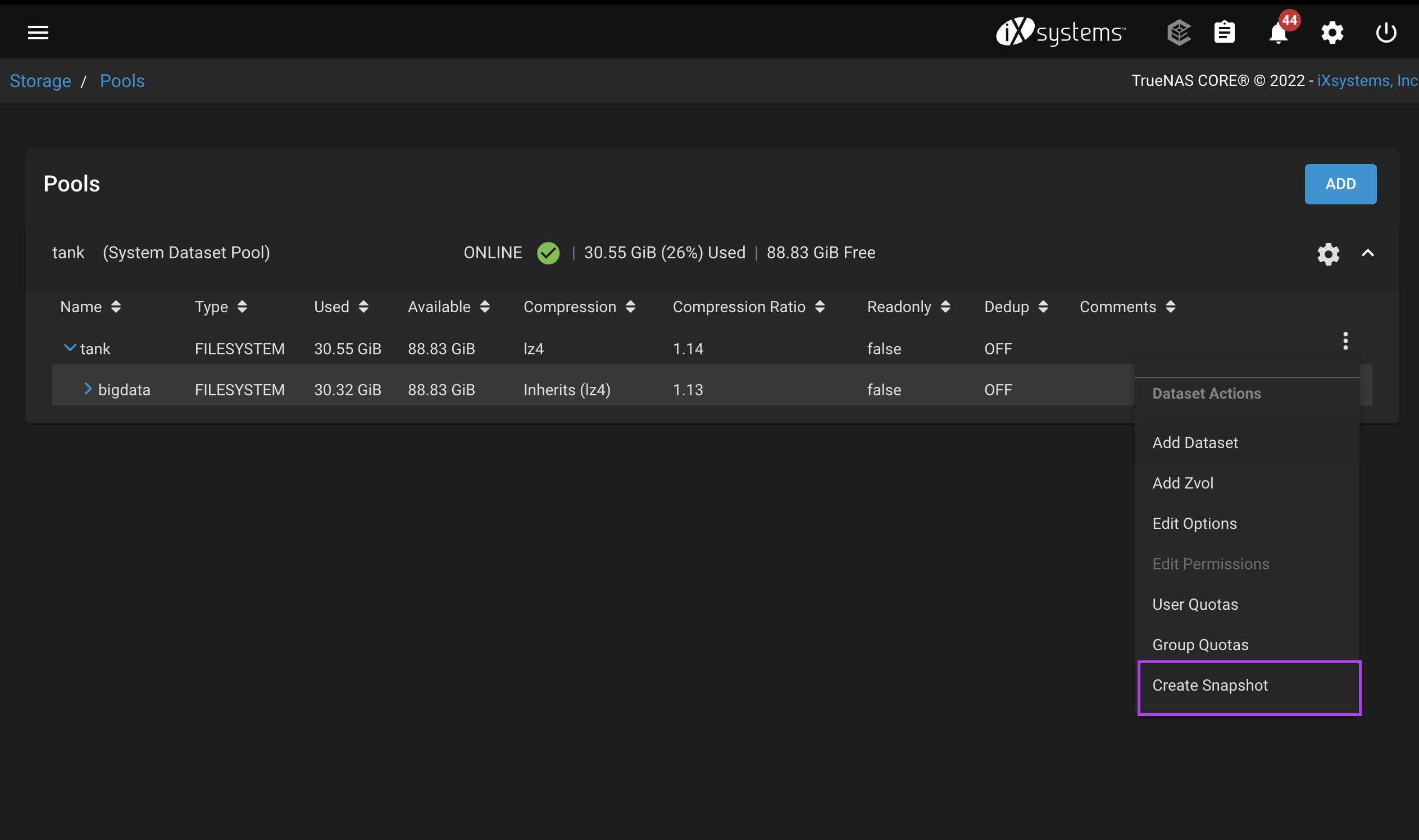
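Once SSH is enabled, one non-interactive command from the source server can confirm two things: that SSH works, and that the destination pool is there. This is a sketch. `check_dest` is a hypothetical name, and it assumes the destination address and pool name used in this post (root@10.12.21.3 and tank). `BatchMode=yes` makes ssh fail rather than prompt for a password, which an unattended, scheduled replication job would need.

```shell
#!/usr/bin/env bash
# check_dest [USER@HOST] [POOL]
# Hypothetical helper: prints the destination pool's name, health, and free
# space over SSH, failing fast instead of prompting for a password.
check_dest() {
  local dest=${1:-root@10.12.21.3} pool=${2:-tank}
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$dest" \
    zpool list -H -o name,health,free "$pool"
}
```

A healthy result is a single tab-separated line with the pool name, ONLINE, and its free space.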

Create a ZFS snapshot on the source server.

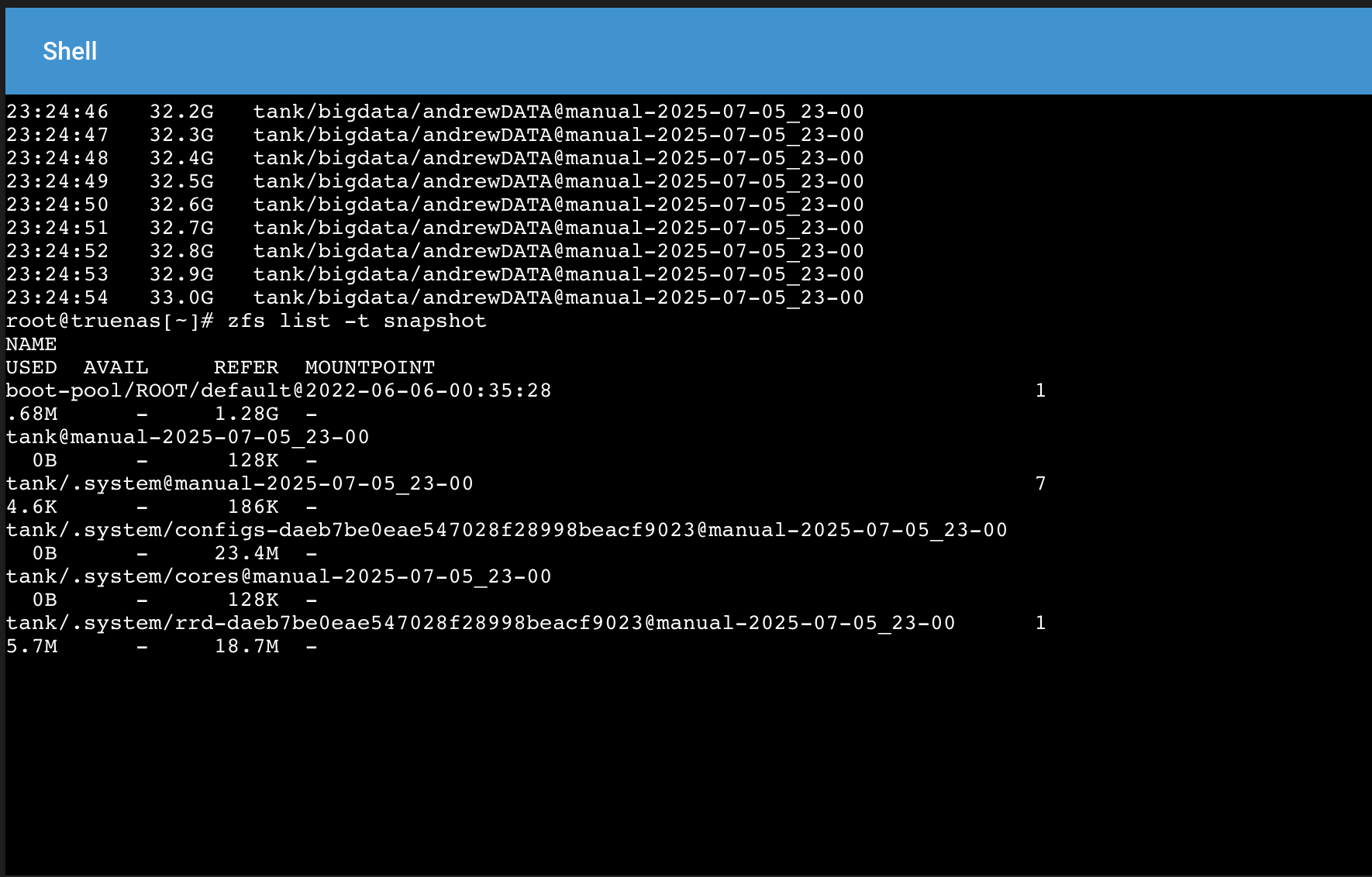
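The snapshot can also be taken from the shell. The sketch below reproduces the naming pattern of my snapshot (manual-YYYY-MM-DD_HH-MM). It uses `zfs snapshot -r` so that every child dataset, such as andrewDATA, gets a snapshot with the same name for `zfs send -R` to pick up. The function names are hypothetical. tank/bigdata is the default only because it is the dataset in this post.

```shell
#!/usr/bin/env bash
# snap_name: timestamped name in the same style as manual-2025-07-07_23-07.
snap_name() {
  date +manual-%Y-%m-%d_%H-%M
}

# make_snapshot [DATASET]
# Hypothetical helper: takes a recursive snapshot of DATASET and its
# children, then prints the full snapshot name for the send step.
make_snapshot() {
  local snap
  snap="${1:-tank/bigdata}@$(snap_name)"
  zfs snapshot -r "$snap" && printf '%s\n' "$snap"
}
```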

List the snapshots to confirm the new one was created.
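Checking a snapshot list by eye works for one dataset, but with several children it is easy to miss one. A hypothetical `missing_snap` helper can print every filesystem under the source dataset that lacks a given snapshot. Empty output means every child has it. The sketch assumes the snapshot name is passed without the dataset prefix, that is, only the part after @.

```shell
#!/usr/bin/env bash
# missing_snap DATASET SNAPNAME
# Hypothetical helper: prints each filesystem under DATASET (including
# DATASET itself) that has no snapshot called SNAPNAME.
missing_snap() {
  local root=$1 snap=$2 fs
  zfs list -H -r -t filesystem -o name "$root" |
    while read -r fs; do
      zfs list -H -t snapshot -o name "$fs@$snap" >/dev/null 2>&1 ||
        printf '%s\n' "$fs"
    done
}
```

For example, `missing_snap tank/bigdata manual-2025-07-07_23-07`. The plain listing is `zfs list -t snapshot -r tank/bigdata`.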

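Send the snapshot to the destination server over SSH. On the sending side, -R builds a replication stream that includes child datasets, their snapshots, and properties, and -v prints progress. On the receiving side, -d drops the source pool name from the sent path and recreates the rest under the target pool, so tank/bigdata lands at tank/bigdata. The -u flag leaves the received datasets unmounted. The -F flag forces the receive, which can roll back or overwrite existing data on the destination; for incremental replication streams it also destroys snapshots and datasets that no longer exist on the source.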

zfs send -Rv tank/bigdata@manual-2025-07-07_23-07 | ssh root@10.12.21.3 zfs receive -Fdu tank
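Two refinements to the send command seemed worth sketching. First, `zfs send -n` does a dry run, and with `-v -P` it prints machine-readable size estimates. That lets you check the stream size against free space on the destination before anything is transferred. Second, with `set -o pipefail`, a failure on either side of the pipe is reported, not only a failure of the last command. Both functions are hypothetical wrappers. The awk filter assumes the parsable dry-run output contains a `size` line. That matches my understanding of recent OpenZFS versions, but confirm it on your own version.

```shell
#!/usr/bin/env bash
set -o pipefail  # fail if zfs send OR the remote zfs receive fails

# estimate_send SNAPSHOT
# Hypothetical helper: dry run (-n) with parsable verbose output (-vP);
# prints the estimated replication stream size in bytes.
estimate_send() {
  zfs send -nvP -R "$1" 2>&1 | awk '$1 == "size" { print $2 }'
}

# replicate SNAPSHOT [USER@HOST] [POOL]
# Hypothetical wrapper around the send | receive pipeline used in this post.
replicate() {
  local snap=$1 dest=${2:-root@10.12.21.3} pool=${3:-tank}
  zfs send -Rv "$snap" | ssh "$dest" zfs receive -Fdu "$pool"
}
```

For example, run `estimate_send tank/bigdata@manual-2025-07-07_23-07` first, then `replicate tank/bigdata@manual-2025-07-07_23-07` once the size fits.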

Configure shares for the new pool on the destination server so that clients can access the data:

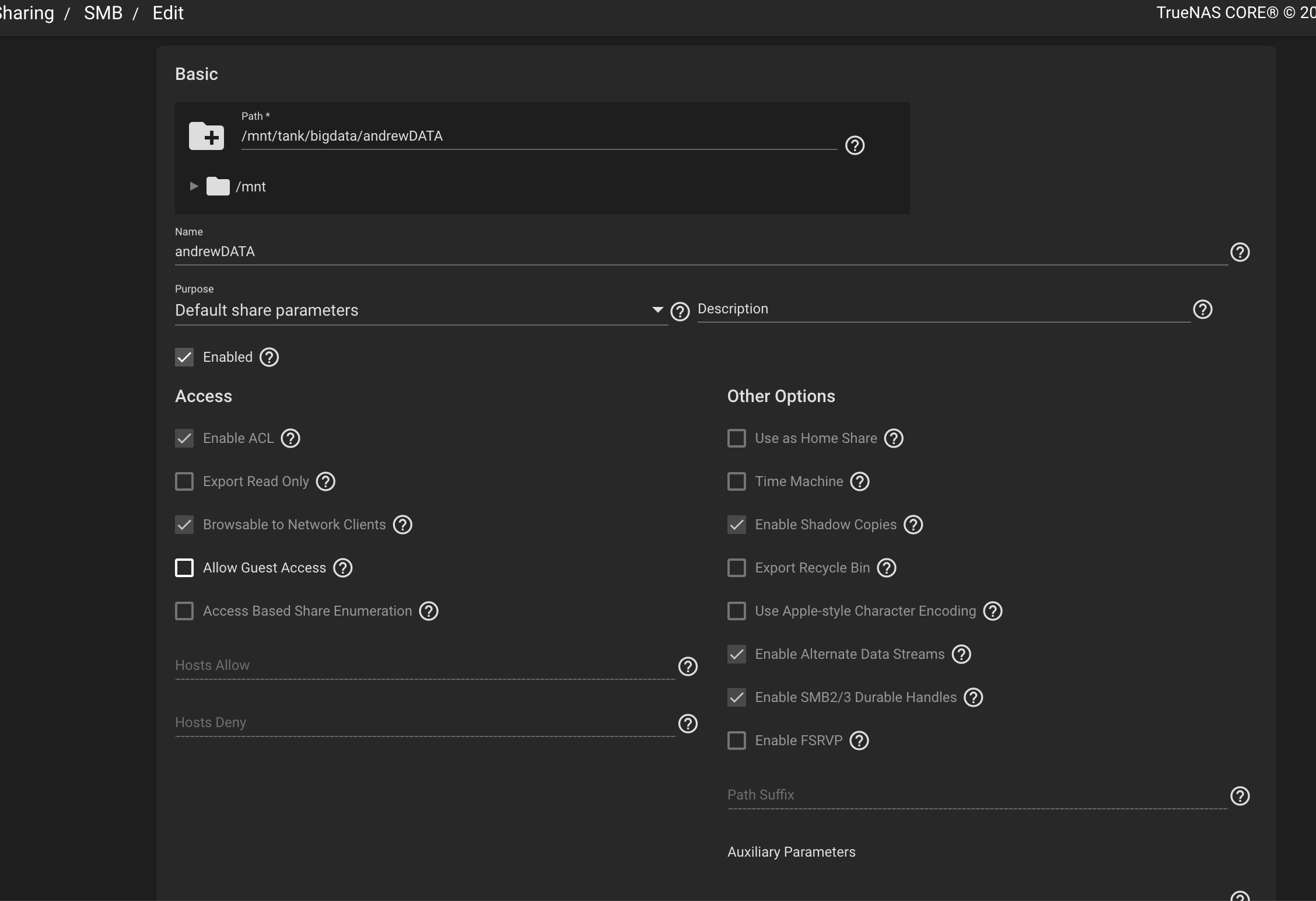

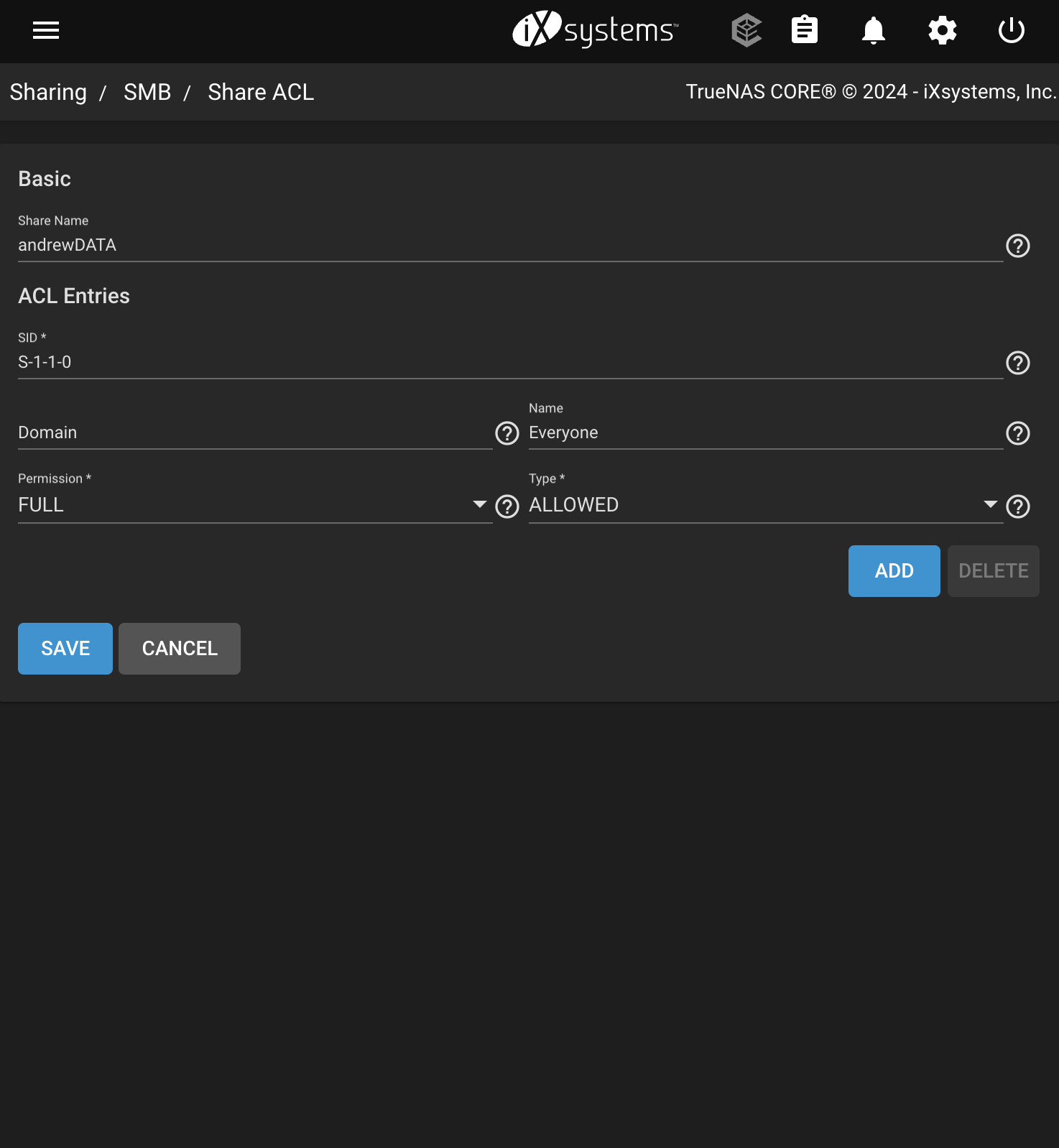

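The share settings have two permission layers. The share ACL controls who can access the share. The file system ACL controls permissions on the files and directories in the dataset.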

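The -u flag on zfs receive leaves the received datasets unmounted, so check whether the dataset is mounted:

zfs list -o name,mountpoint,mounted tank/bigdata/andrewDATA

If MOUNTED shows no, mount it. Note that zfs mount takes the dataset name, not a path, so there is no leading slash:

zfs mount tank/bigdata/andrewDATA

Running the check on my destination server: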

root@truenas[~]# zfs list -o name,mountpoint,mounted tank/bigdata/andrewDATA

NAME MOUNTPOINT MOUNTED

tank/bigdata/andrewDATA /mnt/tank/bigdata/andrewDATA yes
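With several child datasets, checking each one by hand gets tedious. This hypothetical helper walks every filesystem under a dataset and mounts the ones whose `mounted` property is `no`. The built-in `zfs mount -a`, which mounts all mountable filesystems, is the blunter alternative. This sketch does not skip datasets with canmount=off, so those will report an error if they are not mounted.

```shell
#!/usr/bin/env bash
# mount_unmounted [DATASET]
# Hypothetical helper: mounts each filesystem under DATASET (including
# DATASET itself) whose "mounted" property is "no".
mount_unmounted() {
  local fs mounted
  # -H gives tab-separated output with no header line.
  zfs list -H -r -t filesystem -o name,mounted "${1:-tank/bigdata}" |
    while IFS=$'\t' read -r fs mounted; do
      if [ "$mounted" = no ]; then
        zfs mount "$fs" && printf 'mounted %s\n' "$fs"
      fi
    done
}
```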

What I Learned

Replication with ZFS was straightforward. It took me a while to start, because I expected the process to be complicated and simply procrastinated. But replication over SSH was simple, and the snapshots were portable across different versions of TrueNAS, which made migrating the data easy.

Takeaways

This may not be the best way to duplicate data. It is not ideal for verifying data integrity, and certainly not good security practice, since everything is sent as root over SSH. But it let me start keeping multiple copies of my data before I had to pack my servers away!

What Next?

Upgrading TrueNAS and Proxmox.